Photo by Jelleke Vanooteghem on Unsplash

The number of use cases for Artificial Intelligence grows every year. Delivering the performance these applications need depends critically on the underlying silicon technology platform. The computing industry has developed many kinds of AI-inspired applications and is now looking for a silicon architecture that can serve all of these application scenarios.

Along the way, the semiconductor industry has delivered silicon platforms such as GPUs, TPUs, NPUs, and AIUs. What these platforms have in common is how they are organized internally to process data. Ultimately, AI applications need high throughput so that training and inference take as little time as possible.

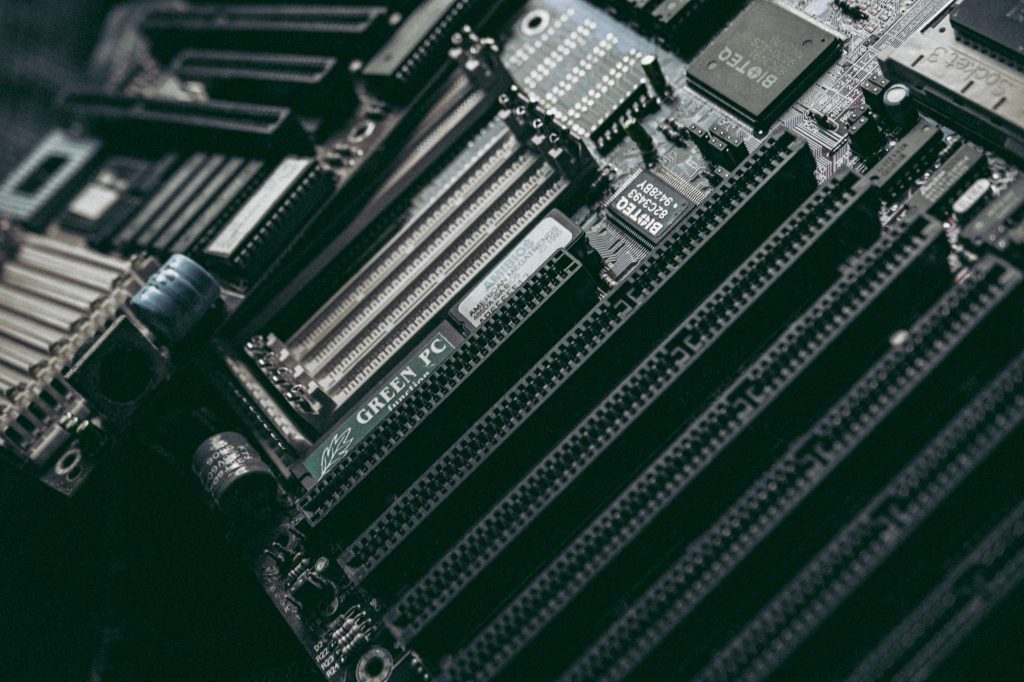

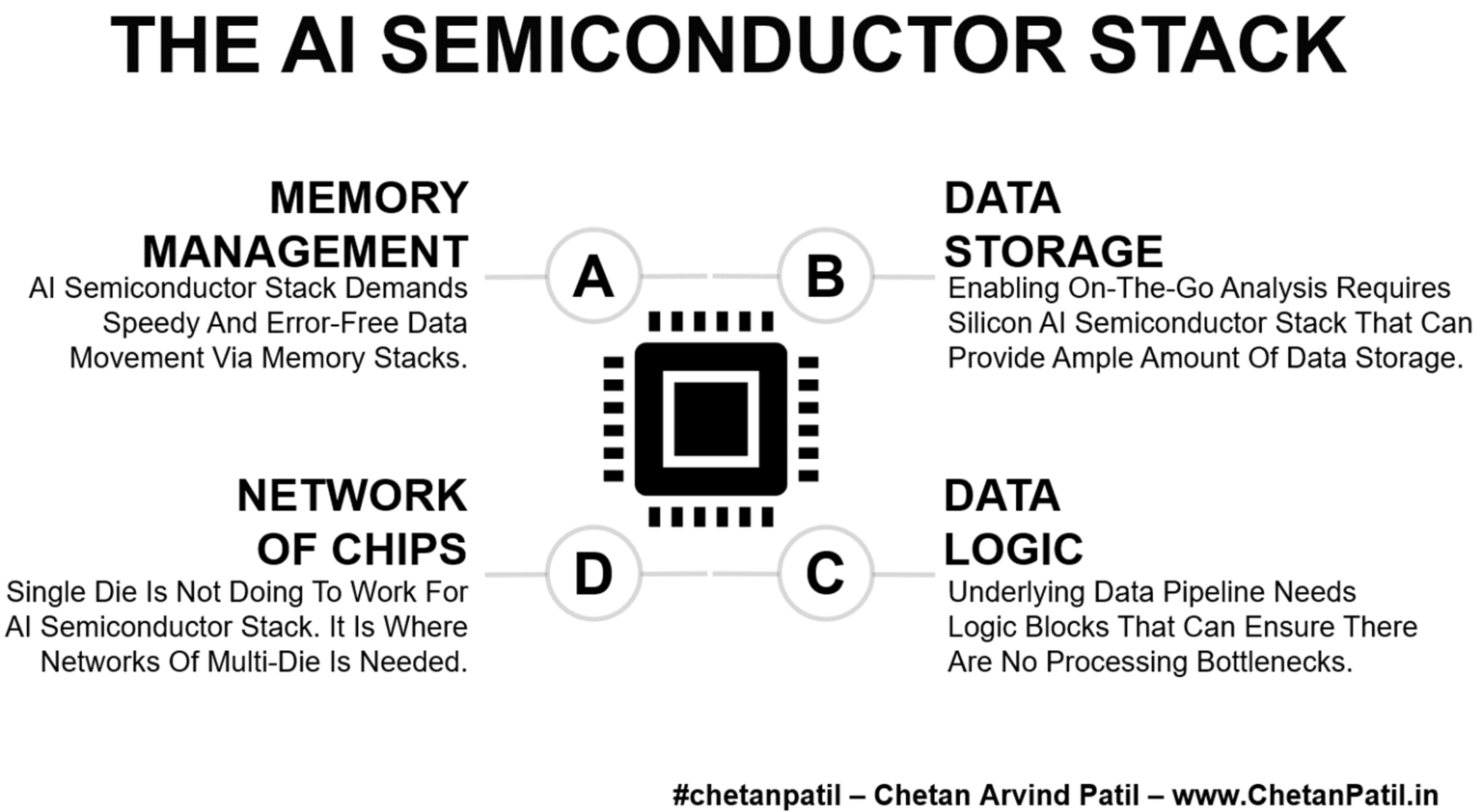

Memory Management: AI Semiconductor Stack Demands Speedy And Error-Free Data Movement Via Memory Stacks.

Data Storage: Enabling On-The-Go Analysis Requires An AI Semiconductor Stack That Can Provide Ample Data Storage.

The internals of the AI Semiconductor Stack require near-perfect memory management, because memory is the key to handling large data sets. The silicon architecture has to ensure that the AI application does not run into memory bottlenecks. If it does, the application slows down, which eventually harms the customer experience.
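One common way to reason about when memory becomes the bottleneck is the roofline model. The article does not mention it; it is brought in here only as an illustration. The model caps attainable performance at the lower of two limits: the chip's peak compute rate, and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs performed per byte moved). The sketch below assumes hypothetical values for peak compute and bandwidth; they do not describe any real GPU, TPU, NPU, or AIU.

```python
# Minimal roofline-model sketch. PEAK and BW are hypothetical placeholders,
# not specifications of any real accelerator.

def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float,
                      arithmetic_intensity: float) -> float:
    """Roofline bound: min(peak compute, bandwidth * FLOPs per byte)."""
    return min(peak_gflops, bandwidth_gb_s * arithmetic_intensity)


def is_memory_bound(peak_gflops: float, bandwidth_gb_s: float,
                    arithmetic_intensity: float) -> bool:
    """True when memory bandwidth, not compute, sets the performance ceiling."""
    return bandwidth_gb_s * arithmetic_intensity < peak_gflops


if __name__ == "__main__":
    PEAK = 100_000.0  # hypothetical peak compute, GFLOP/s
    BW = 2_000.0      # hypothetical memory bandwidth, GB/s
    # Illustrative arithmetic intensities (FLOPs per byte), not measured values.
    for name, ai in [("low-intensity kernel", 0.25), ("high-intensity kernel", 200.0)]:
        perf = attainable_gflops(PEAK, BW, ai)
        kind = "memory-bound" if is_memory_bound(PEAK, BW, ai) else "compute-bound"
        print(f"{name}: {perf:,.1f} GFLOP/s ({kind})")
```

With these made-up numbers, the low-intensity kernel can use only a small fraction of the peak compute because it spends its time waiting on memory. This is the kind of slowdown the paragraph above warns about.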

The silicon architecture also has to ensure that data management is not a bottleneck. This requires efficient interaction between the upper-level memories (caches) and the lower-level memories (disk). Slow data movement between these two levels is paid for in processing time, which is critical for AI applications.
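The cost of moving data between memory levels can be made concrete with the textbook average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, applied level by level. The sketch below uses a hypothetical `Level` type with made-up latencies and miss rates. It only shows how a slow lower level inflates the average cost of every access and does not model any particular chip.

```python
# Minimal AMAT sketch across a memory hierarchy. All latencies and miss
# rates are hypothetical, chosen only to illustrate the effect of a slow
# lower level.

from dataclasses import dataclass


@dataclass
class Level:
    name: str
    hit_time_ns: float  # time to serve an access that hits at this level
    miss_rate: float    # fraction of accesses that miss here (0.0 to 1.0)


def amat(levels: list[Level], backing_latency_ns: float) -> float:
    """AMAT = hit_time + miss_rate * (AMAT of the next level), recursively.

    Misses from the last listed level go to a backing store with a fixed
    latency (for example DRAM or disk).
    """
    penalty = backing_latency_ns
    # Walk from the lowest level upward so each level's miss penalty is known.
    for level in reversed(levels):
        penalty = level.hit_time_ns + level.miss_rate * penalty
    return penalty


if __name__ == "__main__":
    caches = [Level("L1 cache", 1.0, 0.05), Level("L2 cache", 10.0, 0.20)]
    for backing, latency_ns in [("DRAM", 100.0), ("disk", 10_000_000.0)]:
        print(f"misses served by {backing}: AMAT = {amat(caches, latency_ns):,.1f} ns")
```

With these illustrative numbers, the same cache configuration averages 2.5 ns per access when misses go to DRAM. When they go to disk, the average rises to roughly 100,000 ns, even though only 1% of accesses reach the backing store.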

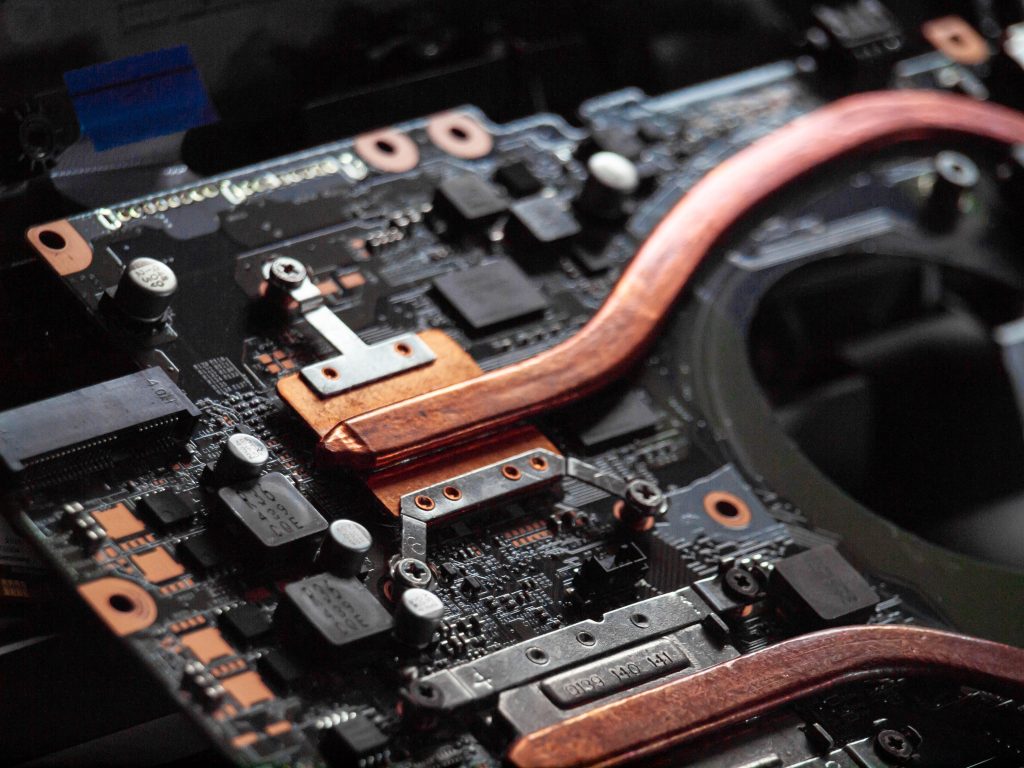

As AI applications grow further, computing power will have to increase with them. Thus, on top of memory management and data movement, two other critical components are data logic and network-on-a-chip.

Data logic ensures that processing is error-free, so that no bottlenecks arise when different sub-blocks interact. It is a critical requirement for high-performance processors and an area that academic and industry researchers continue to improve.

Data Logic: The Underlying Data Pipeline Needs Logic Blocks That Can Ensure There Are No Processing Bottlenecks.

Network Of Chips: A Single Die Is Not Going To Work For The AI Semiconductor Stack. This Is Where Multi-Die Networks Are Needed.

Beyond the technical considerations, the last part of the AI Semiconductor Stack is cost. Ultimately, the silicon solution deployed for AI applications should be cost-effective, meaning the cost of processing each bit must not hurt the business case for the AI application.

The computing industry will keep launching novel use cases for AI. Today, it is ChatGPT. Tomorrow, it will be something else. Ultimately, the underlying silicon architecture will have to cater to all of these changing requirements and ensure that every AI Semiconductor Stack is both technically robust and budget-friendly.