
Data Center Networking Became A Silicon Problem

Data center networking has moved from a background enabler to a key driver of performance. In cloud and AI environments, network speed and reliability directly affect application latency, accelerator usage, storage throughput, and cost per workload.

As clusters expand, the network shifts from a minor concern to a system-level bottleneck. At small scale, inefficiencies go unnoticed. At large scale, even slight latency spikes, bandwidth limits, or congestion can idle expensive compute, leaving GPUs or CPUs waiting on data transfers.

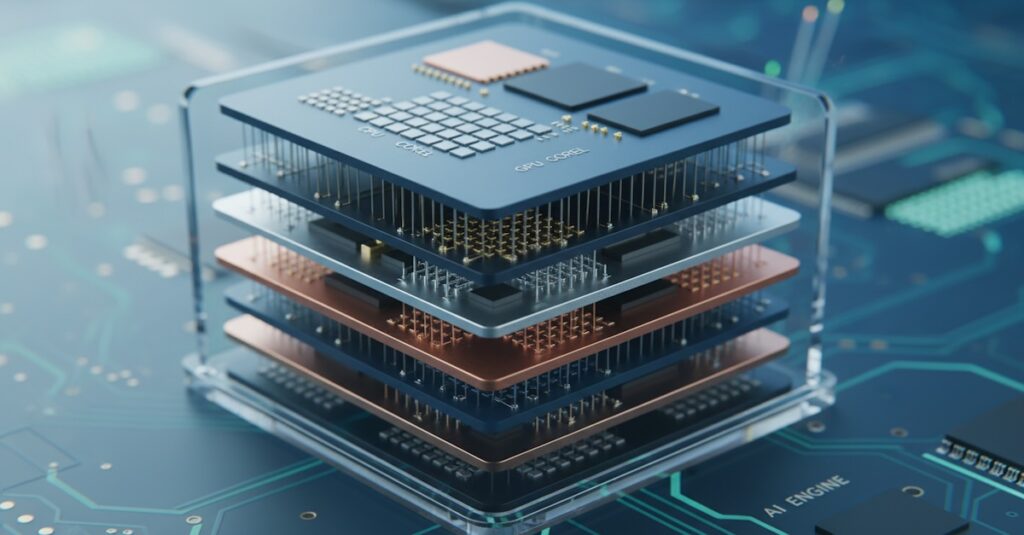

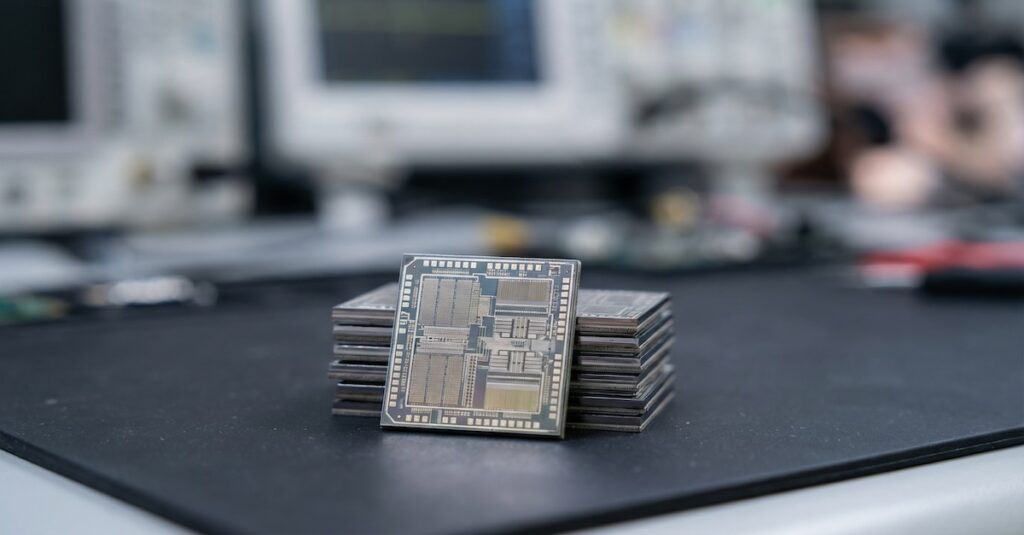

Modern networking advances are now driven by semiconductor progress. Faster, more stable data movement relies less on legacy network design and more on high-speed silicon: custom ASICs, NICs, SerDes, retimers, and the supporting power and timing architectures.

Meanwhile, networking progress is constrained by physical limits. Signal integrity, packaging density, power delivery, and thermal management set the upper bound for reliable bandwidth at scale. Today’s data center networks increasingly depend on semiconductors that can deliver high throughput and low latency within practical power and cooling limits.

Networks Are Being Redesigned For AI Scale

The shift from traditional enterprise traffic to cloud-native services and AI workloads has reshaped data center communication. Instead of mostly north-south flows between users and servers, modern environments see heavier east-west traffic where compute, storage, and services constantly exchange data. This increases pressure on switching capacity, congestion control, and latency consistency.

AI training further intensifies the challenge. Distributed workloads rely on frequent synchronization across many accelerators, so even small network delays can reduce GPU utilization. As clusters grow, networks must handle more simultaneous flows and higher-bandwidth collective operations while remaining reliable.
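To make the link between synchronization delay and GPU utilization concrete, here is a minimal sketch using the textbook cost model for a ring all-reduce, in which each of N accelerators sends and receives 2(N-1) chunks of size S/N. The function names, the per-step latency parameter, and the example figures are illustrative assumptions, not measurements from any real cluster. The model also assumes communication is not overlapped with compute, which real training frameworks often do at least partially.

```python
def ring_allreduce_seconds(num_gpus, payload_bytes, link_gbps, per_step_latency_s=0.0):
    """Estimate ring all-reduce time: 2*(N-1) steps, each moving payload/N bytes."""
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single accelerator
    steps = 2 * (num_gpus - 1)              # reduce-scatter plus all-gather
    bytes_per_step = payload_bytes / num_gpus
    link_bytes_per_s = link_gbps * 1e9 / 8  # convert Gb/s to bytes/s
    return steps * (bytes_per_step / link_bytes_per_s + per_step_latency_s)


def accelerator_utilization(compute_s, comm_s):
    """Fraction of a training step spent computing, assuming no overlap."""
    return compute_s / (compute_s + comm_s)


if __name__ == "__main__":
    # Hypothetical inputs: 8 accelerators, 1 GB of gradients, 400 Gb/s links,
    # and 100 ms of compute per step.
    for latency_s in (0.0, 10e-6, 100e-6):
        comm = ring_allreduce_seconds(8, 1e9, 400, per_step_latency_s=latency_s)
        util = accelerator_utilization(0.1, comm)
        print(f"per-step latency {latency_s * 1e6:6.1f} us -> utilization {util:.3f}")
```

Because the per-step latency is paid 2(N-1) times, its contribution grows with cluster size even when link bandwidth stays the same, which is one way to read the claim that small delays matter more as clusters grow.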

As a result, data center networks are no longer built just for connectivity. They are engineered for predictable performance under sustained load, behaving more like a controlled system component than a best-effort transport layer.

Building Blocks That Define Modern Networking

Modern data center networking is increasingly limited by physics. As link speeds rise, performance depends less on traditional network design and more on semiconductor capabilities such as high-speed signaling, power efficiency, and thermal stability.

Custom ASICs and advanced SerDes enable higher bandwidth per port while maintaining signal integrity. At scale, reliability and predictable behavior also become silicon-driven, requiring strong error correction, telemetry, and stable operation under congestion and load.

| Data Center Networking Need | Semiconductor Foundation |
|---|---|
| Higher link bandwidth | Advanced high-speed data transfer techniques, signaling, equalization, clocking design |
| Low and predictable latency at scale | Efficient switch ASIC pipelines, cut-through forwarding, optimized buffering |
| Scaling without power blowup | Power-efficient switch ASICs, better voltage regulation, thermal-aware design |
| Higher reliability under heavy traffic | Error detection, improved silicon margins |
| More ports and density per rack | Advanced packaging, high-layer substrates, thermal co-design |
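The table's latency row mentions cut-through forwarding. As a rough illustration of why it helps, the sketch below compares it with store-and-forward using serialization delay alone. It deliberately ignores propagation delay, switch pipeline latency, and queuing. The function names and example values (4096-byte packet, 64-byte header, 100 Gb/s links, three switches) are assumptions chosen for round arithmetic.

```python
def serialization_s(num_bytes, link_gbps):
    """Time to clock num_bytes onto a link running at link_gbps."""
    return num_bytes * 8 / (link_gbps * 1e9)


def store_and_forward_s(packet_bytes, link_gbps, switches):
    """Each switch receives the whole packet before forwarding it.

    The sender serializes the packet once, and every switch serializes it again.
    """
    return (switches + 1) * serialization_s(packet_bytes, link_gbps)


def cut_through_s(packet_bytes, header_bytes, link_gbps, switches):
    """Each switch starts forwarding once the header has arrived.

    The full packet is serialized once end to end; every switch adds only
    the time needed to receive the header.
    """
    return (serialization_s(packet_bytes, link_gbps)
            + switches * serialization_s(header_bytes, link_gbps))


if __name__ == "__main__":
    saf = store_and_forward_s(4096, 100, switches=3)
    ct = cut_through_s(4096, 64, 100, switches=3)
    print(f"store-and-forward: {saf * 1e9:.2f} ns")
    print(f"cut-through:       {ct * 1e9:.2f} ns")
```

In this simplified model the store-and-forward penalty scales with packet size and hop count, while the cut-through penalty scales only with header size and hop count. Real switches may fall back to store-and-forward under congestion or when port speeds differ.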

A key transition ahead is deeper optical adoption. Electrical links work well over short distances, but higher bandwidth and longer reach push power and signal integrity limits, making optics and packaging integration a growing differentiator.

What This Means For The Future Of Data Center Infrastructure

Data center networking is becoming a platform decision, not just a wiring decision.

As AI clusters grow, networks are judged by how well they keep accelerators busy, how consistently they deliver bandwidth, and how much data they move per watt. This shifts the focus away from peak link speed alone and toward sustained performance under real congestion and synchronization patterns.
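One common way to express "data per watt" is energy per bit, computed as power divided by delivered throughput. The sketch below shows the arithmetic and why sustained rather than peak throughput is the more telling denominator. The function name and the 100 W, 10 Tb/s, and 6 Tb/s figures are hypothetical values picked for easy arithmetic, not data for any real device.

```python
def energy_per_bit_pj(power_w, sustained_tbps):
    """Energy per delivered bit in picojoules: watts / (bits per second)."""
    if sustained_tbps <= 0:
        raise ValueError("sustained_tbps must be positive")
    joules_per_bit = power_w / (sustained_tbps * 1e12)
    return joules_per_bit * 1e12  # convert joules to picojoules


if __name__ == "__main__":
    # Hypothetical device drawing 100 W at its 10 Tb/s peak rate.
    print(f"at peak:      {energy_per_bit_pj(100, 10):.2f} pJ/bit")
    # Same power draw, but congestion limits delivered throughput to 6 Tb/s.
    print(f"under load:   {energy_per_bit_pj(100, 6):.2f} pJ/bit")
```

If power draw stays roughly constant while congestion cuts delivered throughput, the effective energy per bit rises, so a network that looks efficient at peak can be noticeably less so under real traffic.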

For the computing industry, this means infrastructure roadmaps will be shaped by semiconductor constraints and breakthroughs. Power delivery, thermals, signal integrity, and packaging density will set the limits and determine which network architectures can scale cleanly.

As a result, future data centers will place greater emphasis on tightly integrated stacks. These stacks will combine switch silicon, NICs or DPUs, optics, and system software into a coordinated design.

The key takeaway is simple. Next-generation networking will not be defined only by racks and cables. It will be defined by semiconductor technologies that deliver predictable, scalable, and energy-efficient bandwidth at AI scale.