Image Generated Using Nano Banana

Origins of Compute Architecture

The earliest computer architectures were defined by scarcity. Transistors were costly, memory was limited, and performance expectations were modest. Systems relied on single-core, sequential execution where correctness mattered more than scale.

Instruction sets were closely matched to the hardware, and software was written with detailed knowledge of the machine’s behavior. Architecture centered on basic arithmetic and logic operations, simple control flow, and minimal memory structures.

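As transistor scaling continued, performance gains came mainly from higher clock frequencies and more sophisticated microarchitecture rather than from new programming models. Pipelining, instruction-level parallelism, branch prediction, and out-of-order execution allowed more work per cycle, so existing software ran faster without modification. Frequency scaling eventually hit power and thermal limits. Meanwhile, memory latency improved far more slowly than logic speed, leaving processors increasingly stalled while waiting on memory.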
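A standard first-order model of CMOS dynamic (switching) power helps explain why raising the clock rate ran into power limits:

$$
P_{\text{dyn}} \approx \alpha \, C \, V_{dd}^{2} \, f
$$

Here $\alpha$ is the activity factor, $C$ the switched capacitance, $V_{dd}$ the supply voltage, and $f$ the clock frequency. As a rough, illustrative assumption, suppose reaching a higher $f$ requires raising $V_{dd}$ proportionally. Then $P_{\text{dyn}} \propto f^{3}$, and a 20% clock increase costs roughly 73% more dynamic power:

$$
\frac{P'_{\text{dyn}}}{P_{\text{dyn}}} \approx \left(\frac{f'}{f}\right)^{3} = 1.2^{3} \approx 1.73
$$

This simple model ignores static (leakage) power.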

These constraints forced a shift in architectural thinking, from optimizing a single core to managing parallelism, memory locality, and overall system balance, setting the stage for multicore and heterogeneous designs.

The Shift To Parallelism And Heterogeneous Computing

When single-core scaling stalled, parallelism became the primary path to performance growth. Multi-core processors extended scaling within power limits by replicating simpler cores and relying on software to exploit concurrency.

This fundamentally changed the hardware-software relationship, as performance gains now depended on how well applications and operating systems could scale across multiple execution contexts.
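Amdahl's law makes this dependence on software concrete. For a program whose parallelizable fraction is p, running on n cores, it bounds the speedup at S(n) = 1 / ((1 - p) + p / n). The minimal Python sketch below evaluates that bound. It assumes the parallel part scales perfectly and ignores synchronization and memory effects, so real speedups are usually lower.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only `parallel_fraction` of the work scales across `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # Serial time is unchanged; parallel time shrinks by the core count.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Even a small serial fraction caps the benefit of adding more cores.
    for p in (0.5, 0.9, 0.99):
        speedups = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.1f}x" for n in (2, 8, 64))
        print(f"parallel fraction {p}: {speedups}")
```

With p = 0.9, the speedup can never exceed 1 / (1 - p) = 10x, however many cores are added. This is why multicore performance depends so heavily on how much of an application can actually run concurrently.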

As systems grew more parallel, the memory hierarchy and on-chip communication became central architectural challenges. Shared caches, coherence protocols, and interconnects introduced new trade-offs among latency, bandwidth, and power. At the same time, it became clear that general-purpose cores were inefficient for many highly parallel workloads. GPUs and other accelerators showed that specialized, throughput-oriented architectures could deliver far higher performance per watt on such workloads.
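A toy cache model shows why locality matters so much in a memory hierarchy. The sketch below simulates a single direct-mapped cache with hypothetical parameters (64 lines of 64 bytes, 8-byte elements). It replays the byte addresses touched when visiting a row-major matrix in two loop orders. Real processors use set-associative, multi-level caches with prefetchers, so the numbers are illustrative only.

```python
def simulate_direct_mapped(addresses, num_lines=64, line_bytes=64):
    """Return (hits, misses) for a byte-address trace in a direct-mapped cache."""
    tags = [None] * num_lines  # one stored tag per cache line; None means empty
    hits = misses = 0
    for addr in addresses:
        block = addr // line_bytes  # memory block containing this byte
        index = block % num_lines   # the only cache line that block may occupy
        tag = block // num_lines    # distinguishes blocks that share that line
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag       # evict whatever block was there
    return hits, misses


def matrix_trace(rows, cols, elem_bytes=8, row_order=True):
    """Yield byte addresses touched when visiting every element of a row-major matrix."""
    if row_order:
        order = ((r, c) for r in range(rows) for c in range(cols))
    else:
        order = ((r, c) for c in range(cols) for r in range(rows))
    for r, c in order:
        yield (r * cols + c) * elem_bytes


if __name__ == "__main__":
    for row_order in (True, False):
        hits, misses = simulate_direct_mapped(matrix_trace(256, 256, row_order=row_order))
        label = "row order" if row_order else "column order"
        print(f"{label}: {hits} hits, {misses} misses")
```

For a 256 x 256 matrix, row-order traversal misses once per 64-byte line (8,192 misses out of 65,536 accesses). Column-order traversal has a 2,048-byte stride, which maps every access in a column onto just two cache lines, so it misses on every access. The same arithmetic is performed in both cases; only the access pattern differs.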

These trends led to heterogeneous systems in which CPUs handle control and orchestration while accelerators handle dense parallel computation, a division of labor that has reshaped modern compute architecture.
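Splitting work between a CPU and an accelerator carries an explicit cost for moving data. The minimal Python sketch below uses a simple additive time model with no overlap of transfer and compute. All throughput, bandwidth, and launch-overhead figures are hypothetical placeholders, not measurements of any real device. It shows why offloading tends to pay off for compute-dense kernels but not for light ones.

```python
from dataclasses import dataclass


@dataclass
class System:
    # Hypothetical placeholder figures, not specifications of real hardware.
    cpu_flops: float = 1e11          # sustained CPU throughput, FLOP/s
    accel_flops: float = 1e13        # sustained accelerator throughput, FLOP/s
    link_bytes_per_s: float = 2.5e10  # host-to-accelerator bandwidth, bytes/s
    launch_overhead_s: float = 1e-5   # fixed cost to dispatch work, seconds


def cpu_time(flops: float, system: System) -> float:
    return flops / system.cpu_flops


def offload_time(flops: float, bytes_moved: float, system: System) -> float:
    # Dispatch, then move inputs and outputs over the link, then compute (no overlap).
    transfer = bytes_moved / system.link_bytes_per_s
    return system.launch_overhead_s + transfer + flops / system.accel_flops


def should_offload(flops: float, bytes_moved: float, system: System) -> bool:
    return offload_time(flops, bytes_moved, system) < cpu_time(flops, system)


if __name__ == "__main__":
    s = System()
    n = 1_000_000  # float32 vector add: 1 FLOP per element, three 4-byte arrays moved
    print("vector add offload?", should_offload(n, 3 * 4 * n, s))
    m = 2048  # float32 matmul: 2*m^3 FLOPs, three m x m matrices moved
    print("matmul offload?", should_offload(2 * m**3, 3 * 4 * m * m, s))
```

Under these assumed figures, the vector add does too little work per byte to amortize the transfer, while the matrix multiply does enough work per byte to benefit. Real runtimes also overlap transfers with computation and keep data resident on the accelerator to cut this cost.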

Architectural Comparison Across Eras

The evolution of computer architecture can be understood by comparing dominant design approaches across different eras. Architecture has consistently evolved in response to shifting bottlenecks. Early systems were constrained by limited hardware resources and transistor availability. As scaling progressed, power density and memory latency emerged as the primary limits. In modern systems, data movement and energy efficiency have become more restrictive than raw compute capability.

| Era | Primary Compute Model | Key Optimization Focus | Memory Relationship | Dominant Constraints |
|---|---|---|---|---|
| Early Single-Core Era | Sequential execution | Functional correctness and frequency | Simple, flat memory | Transistor cost |
| Frequency Scaling Era | Superscalar single core | Instruction-level parallelism | Growing cache hierarchies | Power density |
| Multi-Core Era | Thread-level parallelism | Core replication and coherence | Shared memory systems | Software scalability |
| Accelerator Era | Heterogeneous compute | Throughput and specialization | Explicit data movement | Programming complexity |
| AI-Focused Era | Domain-specific architectures | Dataflow and energy efficiency | Memory near compute | Data movement cost |

A key theme across this evolution is the changing role of memory. Initially, memory functioned as a passive, uniformly accessed resource. Over time, it evolved into a hierarchical, distributed structure that directly affects performance and efficiency. In AI-oriented architectures, where workloads are data-intensive, memory placement, bandwidth, and locality often outweigh peak compute throughput in determining system effectiveness.
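The claim that bandwidth and locality can outweigh peak compute is often reasoned about with the roofline model. In that model, attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity, the FLOPs performed per byte moved from memory. Below is a minimal sketch; the hardware figures are hypothetical, and the matmul intensity assumes ideal reuse, with each operand moved exactly once.

```python
def attainable_flops(peak_flops: float, mem_bw: float, intensity: float) -> float:
    """Roofline bound: min(peak compute, memory bandwidth x arithmetic intensity)."""
    return min(peak_flops, mem_bw * intensity)


def ridge_point(peak_flops: float, mem_bw: float) -> float:
    """Arithmetic intensity (FLOP/byte) above which a kernel is compute-bound."""
    return peak_flops / mem_bw


if __name__ == "__main__":
    # Hypothetical accelerator: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
    peak, bw = 100e12, 2e12
    kernels = {
        # float32 vector add: 1 FLOP per 12 bytes (two loads, one store)
        "vector add": 1 / 12,
        # float32 n x n matmul with ideal reuse: 2n^3 FLOPs over 12n^2 bytes = n/6
        "matmul n=4096": 4096 / 6,
    }
    print(f"ridge point: {ridge_point(peak, bw):.0f} FLOP/byte")
    for name, ai in kernels.items():
        perf = attainable_flops(peak, bw, ai)
        print(f"{name}: {perf / 1e12:.2f} TFLOP/s ({100 * perf / peak:.1f}% of peak)")
```

Under these assumptions, the vector add is bandwidth-bound and reaches well under 1% of peak. The large matrix multiply sits above the ridge point and can, in principle, use the full compute capability. Raising bandwidth or improving reuse helps the first kernel far more than adding compute.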

These transitions reveal a broader architectural pattern: progress occurs when existing abstractions no longer match workload demands. Each new era introduces mechanisms to address emerging constraints while inheriting complexity from earlier designs. As a result, compute architecture becomes less about isolated components and more about managing trade-offs across the entire system.

Modern Compute Architecture In The AI Era

Modern compute architectures in the AI era are shaped by data-intensive workloads such as machine learning, analytics, and real-time inference. In these workloads, data movement, rather than computation, is often the dominant source of energy consumption and performance loss. As a result, architecture now emphasizes locality, massive parallelism, and specialization.
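A simple accounting sketch can make the energy side of this claim concrete. It splits a kernel's energy into arithmetic and off-chip data movement. The per-operation energies in the example are hypothetical values chosen only to illustrate the bookkeeping. They are not figures for any real process or memory technology; substitute measured or published numbers for a specific system.

```python
def energy_breakdown(flops: int, offchip_bytes: int,
                     pj_per_flop: float, pj_per_offchip_byte: float) -> dict:
    """Split total energy (joules) into compute and off-chip data movement."""
    compute_j = flops * pj_per_flop * 1e-12
    movement_j = offchip_bytes * pj_per_offchip_byte * 1e-12
    total_j = compute_j + movement_j
    return {
        "compute_j": compute_j,
        "movement_j": movement_j,
        "movement_share": movement_j / total_j if total_j else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical per-operation energies, for illustration only.
    PJ_PER_FLOP = 1.0
    PJ_PER_DRAM_BYTE = 100.0
    # A memory-bound kernel: float32 vector add over one million elements
    # (1 FLOP and 12 bytes of off-chip traffic per element).
    n = 1_000_000
    print(energy_breakdown(flops=n, offchip_bytes=12 * n,
                           pj_per_flop=PJ_PER_FLOP, pj_per_offchip_byte=PJ_PER_DRAM_BYTE))
```

With any per-byte cost well above the per-FLOP cost, a low-reuse kernel like this spends nearly all of its energy moving data. Keeping operands in nearby memory and reusing them attacks that term directly.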

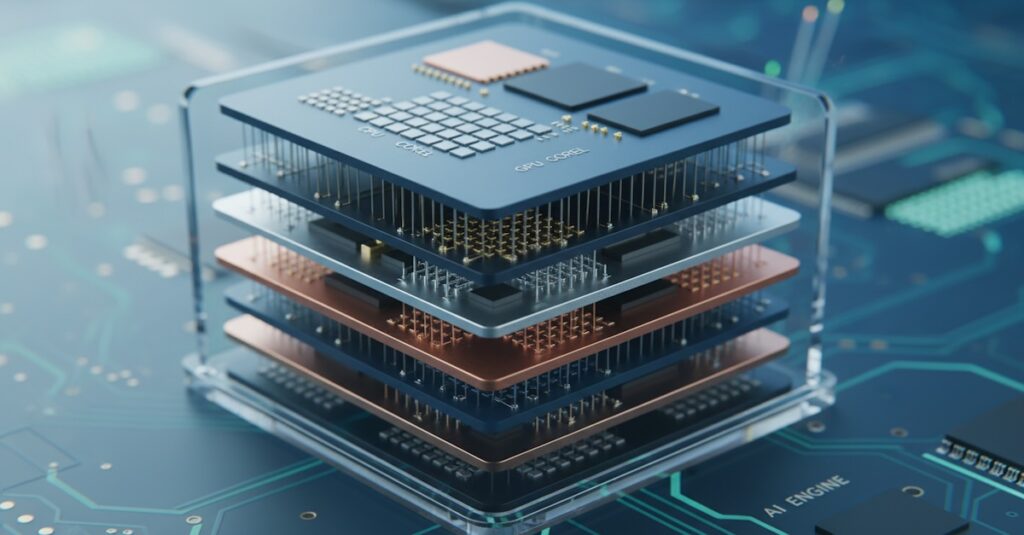

Dataflow-oriented designs, tensor accelerators, and matrix engines align computation directly with data movement. At the same time, chiplet-based systems enable modular scaling and faster architectural iteration, albeit with new challenges in interconnects and system integration.
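One way matrix engines and dataflow designs align computation with data movement is by reusing operands held in fast local storage. The Python sketch below compares a naive matrix multiply with a tiled (blocked) one. It counts element loads from a modeled "main memory". This is a simplification: it ignores writes to the output matrix and assumes one tile of each input fits on-chip. It is not a description of how any particular accelerator works. For tile size t, tiling cuts loads from 2n³ to 2n³/t while producing the same result.

```python
def matmul_naive(A, B):
    """Triple loop; every multiply-add fetches both operands from main memory."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    loads = 0
    for i in range(n):
        for j in range(n):
            acc = 0
            for k in range(n):
                acc += A[i][k] * B[k][j]
                loads += 2
            C[i][j] = acc
    return C, loads


def matmul_tiled(A, B, t):
    """Blocked multiply; each loaded tile element is reused t times from the buffer."""
    n = len(A)
    if n % t != 0:
        raise ValueError("matrix size must be a multiple of the tile size")
    C = [[0] * n for _ in range(n)]
    loads = 0
    for ii in range(0, n, t):
        for jj in range(0, n, t):
            for kk in range(0, n, t):
                # Copy one tile of A and one tile of B into the modeled on-chip buffer.
                a_tile = [row[kk:kk + t] for row in A[ii:ii + t]]
                b_tile = [row[jj:jj + t] for row in B[kk:kk + t]]
                loads += 2 * t * t
                for i in range(t):
                    for j in range(t):
                        acc = 0
                        for k in range(t):
                            acc += a_tile[i][k] * b_tile[k][j]
                        C[ii + i][jj + j] += acc
    return C, loads


if __name__ == "__main__":
    n, t = 8, 4
    A = [[i * n + j for j in range(n)] for i in range(n)]
    B = [[i - j for j in range(n)] for i in range(n)]
    C1, naive_loads = matmul_naive(A, B)
    C2, tiled_loads = matmul_tiled(A, B, t)
    assert C1 == C2  # same result, different data movement
    print(f"naive loads: {naive_loads}, tiled loads: {tiled_loads}")
```

For n = 8 and t = 4, the counts are 1,024 and 256 loads, a 4x reduction (equal to t). Larger tiles cut traffic further but need more on-chip storage, which is the kind of trade-off these designs must balance.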

Looking ahead, compute architecture will be defined by co-design across hardware, software, and workloads. Architects must account for algorithms, data characteristics, and deployment environments as a unified system.

In the AI era, architecture has become a strategic layer that determines scalability and efficiency. Sustained progress will come not from isolated optimizations, but from rethinking how computation, data, and energy interact across the entire computing stack.